You just trained a computer vision model for your ADAS or autonomous driving function.

Congratulations! Now what?

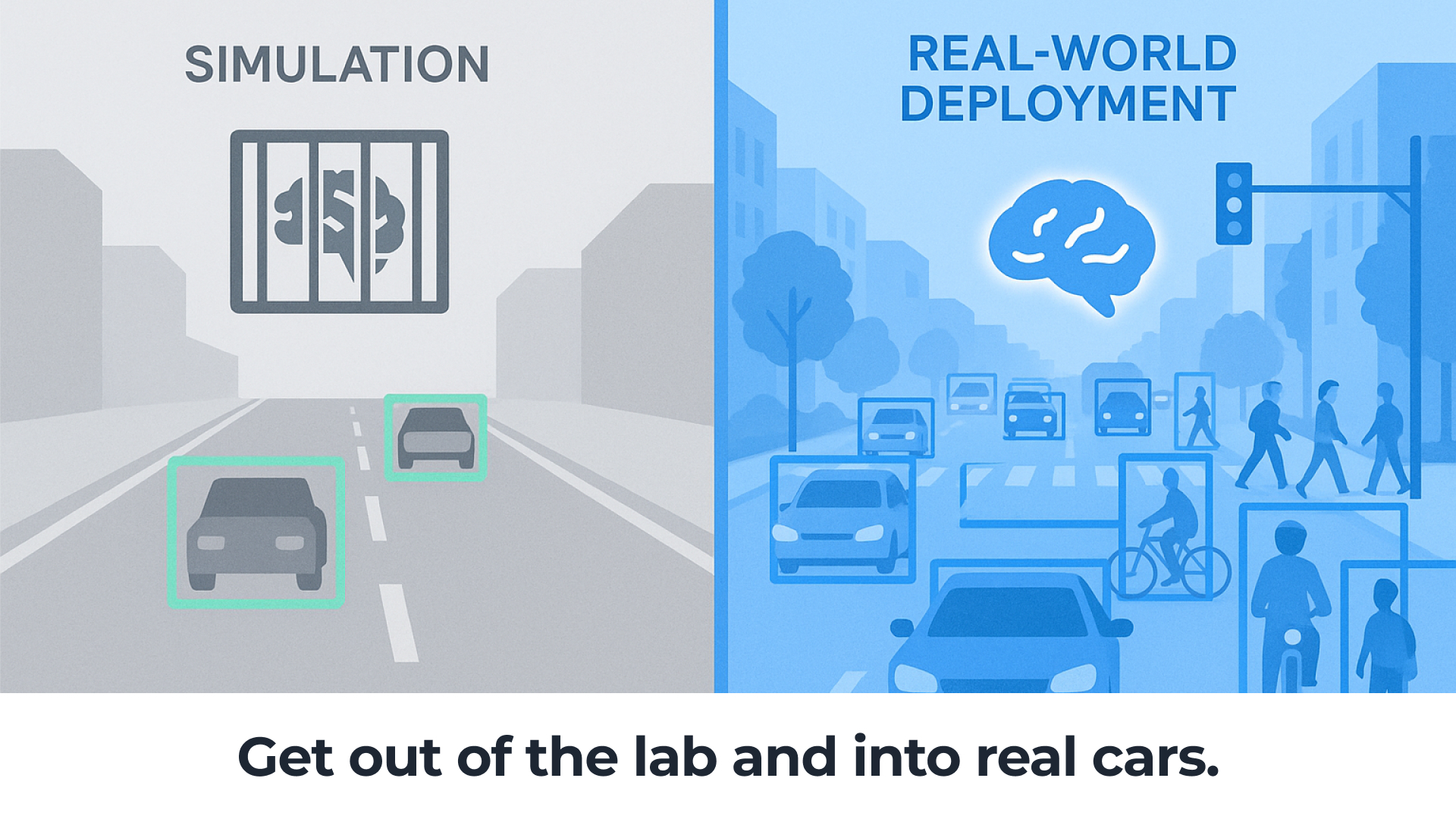

To refine your model, you’ll need to put it through its paces with millions of miles of real-world driving. But every automotive engineer in computer vision knows that moving from the lab to real-world validation is a pain. You have a few choices, and all of them have problems.

- Spin up a dedicated test fleet. A great option … if you have hundreds of thousands or even millions of dollars in your budget to retrofit vehicles and pay test drivers.

- Test on production cars. Even if the over-the-air capabilities exist, there are so many stakeholders and process steps that you’ll wish you’d never asked.

- Rely on simulated data. It’s relatively quick and cheap, but will your project sponsor be convinced that it accurately captures real-world driving and all of its corner cases?

If you’ve ever struggled with this problem, it’s time to talk to Bee Maps. We’ve built the world’s largest network of purpose-built mapping machines, with tens of thousands of connected cameras in cars all around the globe running advanced computer vision models on the edge.

It’s simply the fastest, easiest and most economical way to start logging real-world miles for your computer vision model. Here are the main reasons you’ll be happy you discovered Bee Maps:

- Days, Not Months, to Real-World Testing: With our OTA capabilities, you can push your computer vision models directly to thousands of cameras and start collecting data in as little as 24 hours, without hiring a single driver or making a single hardware change.

- Hardware Built for Precision: Unlike consumer-grade devices and smartphone apps, our cameras are engineered specifically for mapping and CV workloads, offering high-precision localization, powerful edge compute and both monocular and stereo cameras to run your models effectively.

- Diverse Driving Environments Around the World: Tap into tens of thousands of cameras operating in urban centers, rural roads, and highway corridors across all regions of the world. No more geographic blind spots or limited datasets. Bee Maps unlocks coverage in every operating domain.

It’s time to combine the fidelity of on-device inference with the flexibility of cloud-native data annotation and AI training tools. By avoiding the hassle of deploying devices into the world, you can focus on faster iteration for a software-defined future.

Contact us today and learn how we can help you go from prototype to ground truth. Fast.

Follow us on X or try Bee Maps for free.