The Network is Buzzing

The World Model Bottleneck Nobody's Talking About

Everyone's racing to build world models.

- NVIDIA released Cosmos.

- Wayve just got deals with Uber and Nissan.

- Tesla's been training on its incredible fleet data for years.

- OpenAI is building its own.

- So are many startups.

The models will keep getting better. That's not the question.

The question is: where does the training data come from?

The Physical World Isn't the Web

LLMs got to where they are because the internet handed them trillions of tokens. Every blog post, every Wikipedia article, every Reddit thread, every book ever scanned — it was all just sitting there, waiting to be ingested.

The physical world doesn't work like that.

There's no "Common Crawl" for driving data. No open-source visual archive of every road, every intersection, every weather condition that refreshes each day. The real world isn't visually indexed, let alone kept current.

This is the bottleneck.

World models will continue to improve. The architecture breakthroughs will keep coming. Compute will keep scaling. Training techniques will get more efficient. None of it matters without data. And unlike the web, physical world data doesn't accumulate passively. It doesn't sit on servers waiting to be scraped. Someone has to go out and collect it. Every single day.

GPUs Are Table Stakes. Data Is the Bottleneck.

NVIDIA announced "Physical AI Open Datasets" with 1,700 hours of driving data. It's a meaningful contribution to the field.

But let's be honest about the scale of what's needed.

1,700 hours is one dashcam running nonstop for about 71 days. The diversity of conditions, geographies, and edge cases in that dataset is inherently limited. Not because NVIDIA didn't try, but because collecting physical world data at scale is genuinely hard.
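For anyone who wants to check the comparison, here is the arithmetic as a short sketch. The 1,700-hour figure comes from the text; the fleet size and daily driving hours in the second call are hypothetical placeholders, not figures for any real fleet.

```python
# Back-of-the-envelope check of the dataset size quoted above.
DATASET_HOURS = 1_700   # figure cited in the text
HOURS_PER_DAY = 24


def continuous_days(hours: float) -> float:
    """Days a single camera would need to record `hours` of video nonstop."""
    return hours / HOURS_PER_DAY


def days_to_collect(hours: float, vehicles: int, hours_per_vehicle_per_day: float) -> float:
    """Days a fleet needs to collect `hours` of video, given per-vehicle daily driving time."""
    return hours / (vehicles * hours_per_vehicle_per_day)


print(f"{continuous_days(DATASET_HOURS):.1f} days for one camera")  # 70.8 days

# Hypothetical fleet: 100 vehicles, each recording 2 hours a day.
print(f"{days_to_collect(DATASET_HOURS, 100, 2.0):.1f} days for the fleet")  # 8.5 days
```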

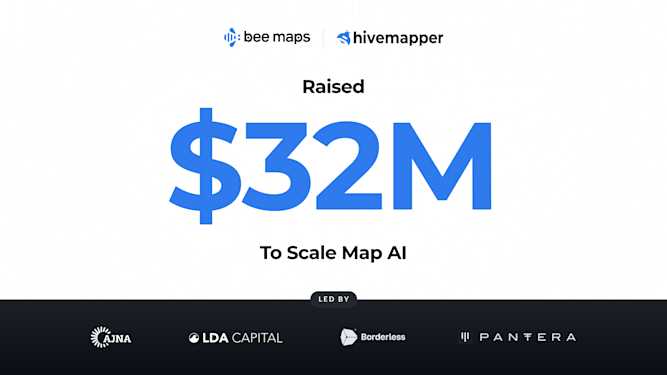

This is where Bee Maps fits in. Our global fleet has mapped 37% of the world's roads. We capture vast amounts of real-world driving video — not simulation, not test routes, but actual roads in actual conditions.

More importantly, we're capturing the moments that matter most for training: harsh braking events, swerving, high g-force moments, near-misses. The edge cases that world models need to understand but rarely see in curated datasets.

Video Alone Is Not Enough

Here's where I think most world model training pipelines will hit a wall: they treat driving data as a video problem.

It's not. It's a sensor fusion problem.

A 10-second clip of a car swerving is useful. That same clip with synchronized telemetry — speed at each frame, g-force profile, GPS coordinates, heading — is training data.
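To make "synchronized" concrete, here is a minimal sketch of one way to attach a telemetry value to each video frame: linear interpolation between the two nearest sensor samples. The function names and the `(timestamp, value)` layout are assumptions for illustration, not the format of any particular API.

```python
from bisect import bisect_left


def interpolate(samples, t):
    """Linearly interpolate a sensor value at time t.

    `samples` is a list of (timestamp_seconds, value) pairs sorted by time.
    Times outside the sampled range are clamped to the nearest sample.
    """
    times = [ts for ts, _ in samples]
    i = bisect_left(times, t)
    if i == 0:
        return samples[0][1]
    if i == len(samples):
        return samples[-1][1]
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    if t1 == t0:
        return v1
    weight = (t - t0) / (t1 - t0)
    return v0 + weight * (v1 - v0)


def label_frames(frame_times, samples):
    """Pair each frame timestamp with the interpolated sensor value."""
    return [(t, interpolate(samples, t)) for t in frame_times]


# Speed sampled once per second; frames arrive between samples.
speed = [(0.0, 10.0), (1.0, 20.0)]
print(label_frames([0.0, 0.5, 1.0], speed))
```

The same routine works for any scalar channel (speed, heading, one accelerometer axis), as long as video and sensors share a clock.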

World models need to understand physics, not just pixels. They need to learn that a 0.4g lateral acceleration on wet pavement means something different than the same force on dry concrete. They need the ground truth that only comes from real sensors on real roads.
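One way to see why the same 0.4g means different things is to compare it with the grip available. On a flat road, the maximum lateral acceleration in g is roughly the tire-road friction coefficient, so dividing one by the other gives the share of grip in use. The friction coefficients below are illustrative assumptions for the sketch, not measured values.

```python
# Illustrative friction coefficients (assumed, not measured).
ASSUMED_FRICTION = {"dry": 0.8, "wet": 0.5}


def friction_utilization(lateral_accel_g: float, friction_coefficient: float) -> float:
    """Fraction of available grip used by a lateral acceleration on a flat road."""
    if friction_coefficient <= 0:
        raise ValueError("friction coefficient must be positive")
    return lateral_accel_g / friction_coefficient


for surface, mu in ASSUMED_FRICTION.items():
    used = friction_utilization(0.4, mu)
    print(f"{surface}: {used:.0%} of available grip")
```

Under these assumptions the same maneuver uses about half the available grip on a dry road and about four-fifths of it on a wet one, which is the kind of distinction pixels alone do not reveal.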

Our AI Event Videos API doesn't just return video clips. Each event includes:

- High-resolution GNSS data (position, altitude, timestamp at 30 Hz)

- IMU sensor streams (accelerometer, gyroscope)

- Event classification (harsh braking, swerving, speeding, aggressive acceleration)

- Speed profiles throughout the event

- Geographic and temporal metadata

This is what supervised learning actually requires. Labeled, sensor-rich, geographically diverse training data at scale.
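As a rough picture of what one such record might look like in code, here is a sketch of a container for the fields listed above. Every class and field name here is hypothetical; the actual response schema may differ.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GnssSample:
    timestamp_s: float      # seconds since the start of the event
    lat: float
    lon: float
    altitude_m: float


@dataclass
class ImuSample:
    timestamp_s: float
    accel_mps2: tuple[float, float, float]   # accelerometer x, y, z
    gyro_radps: tuple[float, float, float]   # gyroscope x, y, z


@dataclass
class DrivingEvent:
    event_type: str                          # e.g. "harsh_braking", "swerving"
    video_url: str
    gnss: list[GnssSample] = field(default_factory=list)
    imu: list[ImuSample] = field(default_factory=list)
    speed_mps: list[float] = field(default_factory=list)

    def peak_speed_mps(self) -> float:
        """Highest speed recorded during the event, or 0.0 if none was logged."""
        return max(self.speed_mps, default=0.0)


event = DrivingEvent(
    event_type="harsh_braking",
    video_url="https://example.com/clip.mp4",  # placeholder URL
    speed_mps=[22.0, 18.5, 9.0],
)
print(event.event_type, event.peak_speed_mps())
```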

The Events Video API Is Live

If you're working on world models and need real-world driving data, we built this for you:

Query by event type. Filter by geography. Specify date ranges. Get back video URLs with full sensor context.
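The three filters can be illustrated with a local filter over event records. This is not the API's request format: the record fields and the bounding-box order (min_lon, min_lat, max_lon, max_lat) are assumptions made for the sketch.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class EventSummary:
    event_type: str
    lat: float
    lon: float
    day: date
    video_url: str


def filter_events(events, event_type, bbox, start, end):
    """Keep events of one type inside a bounding box and an inclusive date range.

    bbox is (min_lon, min_lat, max_lon, max_lat).
    """
    min_lon, min_lat, max_lon, max_lat = bbox
    return [
        e for e in events
        if e.event_type == event_type
        and min_lon <= e.lon <= max_lon
        and min_lat <= e.lat <= max_lat
        and start <= e.day <= end
    ]


events = [
    EventSummary("swerving", 37.77, -122.42, date(2025, 3, 1), "clip-a"),
    EventSummary("harsh_braking", 37.78, -122.41, date(2025, 3, 2), "clip-b"),
    EventSummary("swerving", 40.71, -74.00, date(2025, 3, 3), "clip-c"),
]

# Swerving events in a box around San Francisco during March 2025.
hits = filter_events(
    events, "swerving", (-123.0, 37.0, -122.0, 38.0),
    date(2025, 3, 1), date(2025, 3, 31),
)
print([e.video_url for e in hits])  # ['clip-a']
```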

Pull the raw telemetry. Train on real physics.

The model architectures will keep improving. The compute will keep scaling. But the teams that pull ahead will be the ones who solved the data supply chain first.

That's the bottleneck we're working on.