About Us

Physical AI requires something the internet can't provide: fresh, structured data about the real world. We built the full stack — from the sensor to the API — to deliver it.

The Problem

Why data for Physical AI is so hard

Training a language model is hard, but the internet gives you a corpus. Physical AI is a fundamentally different problem: the data doesn't exist until someone drives every street, walks every path, processes every frame, and delivers it as structured, geo-referenced ground truth.

There is no “internet of the physical world” to scrape. The real world changes constantly — a one-time mapping pass is never enough.

Most companies attempting this either build expensive first-party fleets that can't scale or rely on commodity cameras that can't deliver the necessary quality. We took a different approach: purpose-built hardware deployed at massive distributed scale, with a software stack designed from day one to close the loop from capture to API.

Our Hardware

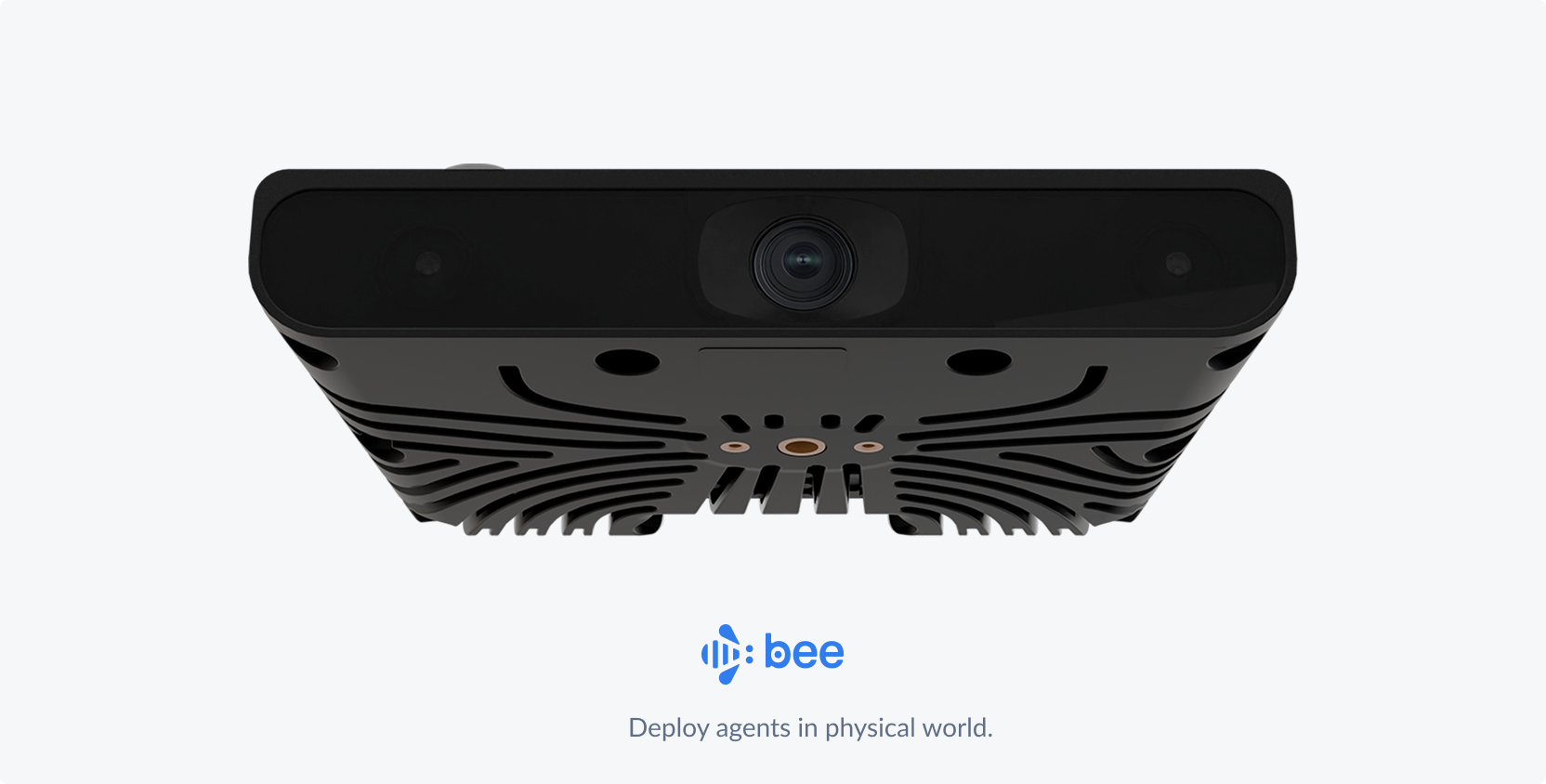

The Bee

If you want the right data, you have to build the right instrument. The Bee is a programmable sensor platform designed from scratch to capture and structure data from the physical world.

The hard part of building a sensor isn't any single component — it's getting all of them to work together in conditions you didn't anticipate. We chose every element of this device — optics, IMU, GPS, onboard compute — to serve one objective: maximizing the quality of structured spatial data under real-world constraints.

This is our fourth generation. Each version was shaped by what the previous one taught us: billions of images' worth of failure modes that don't show up in simulation.

Validated the distributed collection model with off-the-shelf sensors

Purpose-built lens and sensor stack for mapping-grade imagery

On-device ML for real-time frame scoring and selective upload

LTE connectivity, advanced IMU fusion, and hardened enclosure for global deployment

The Platform

From lens to API

We built every layer of the technology stack, from the software running on cameras in contributors' vehicles to the APIs that serve data for Physical AI use cases and beyond.

Products