
Query Rare Driving Event Videos. Skip 50,000 Hours of Video

Training world models on driving data? You've got a curation problem.

More than 90% of dashcam footage is uneventful. A car driving straight. Stop signs where everyone stops. Lane changes that go fine. The moments that actually matter for autonomy, safety, and simulation (hard braking, swerving, stop sign violations, tailgating) are buried in terabytes of boring.

The AI Event Videos API flips the model. We run detection at the edge, across hundreds of millions of road km mapped by Bee cameras deployed globally, and surface only the action-rich clips. You don't download hours hoping to find a near-miss. You query directly for "harsh braking events in San Francisco from last month" and get back exactly what you need.

What You Actually Get

Each event includes:

- Event type + timestamp: HARSH_BRAKING, SWERVING, STOP_SIGN_VIOLATION, etc.
- GPS location: precise lat/lon
- Metadata: acceleration values, speed history, firmware version
- Video URL: signed, temporary link to the clip
- Optional: frame-level GNSS + IMU. This is the killer feature for serious ML work

That last one deserves emphasis. When you're doing trajectory reconstruction, path alignment, or learning motion-conditioned representations, event-level metadata isn't enough. You need per-frame sensor data aligned to video.

Pass ?includeGnssData=true&includeImuData=true and you get:

- GPS array (lat, lon, altitude, timestamp per frame)
- IMU array (accelerometer + gyroscope readings with timestamps)

This adds 50-200 KB per response—so don't request it for every event. Search first, shortlist, then expand the ones you're actually training on.
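To make "per-frame sensor data aligned to video" concrete, here is a minimal sketch that pairs each GPS sample with the IMU reading nearest in time. The field names (timestamp, lat, lon, accel, gyro) and millisecond timestamps are assumptions for illustration, not the documented response schema, so check the API specs for the real shape.

```javascript
// Hypothetical sample shapes (assumed, not taken from the API specs):
//   gnss: [{ timestamp: <ms>, lat, lon, altitude }, ...]
//   imu:  [{ timestamp: <ms>, accel: [x, y, z], gyro: [x, y, z] }, ...]
// Both arrays are assumed to be sorted by timestamp.

// Return the index of the sample whose timestamp is closest to t.
// Expects a non-empty array.
function nearestIndex(samples, t) {
  let lo = 0;
  let hi = samples.length - 1;
  // Binary search for the first sample with timestamp >= t.
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (samples[mid].timestamp < t) lo = mid + 1;
    else hi = mid;
  }
  // The closest sample is either that one or the one just before it.
  if (lo > 0 && t - samples[lo - 1].timestamp <= samples[lo].timestamp - t) {
    return lo - 1;
  }
  return lo;
}

// Attach the nearest IMU reading to every GPS fix (null if there is no IMU data).
function alignImuToGnss(gnss, imu) {
  return gnss.map((fix) => ({
    ...fix,
    imu: imu.length > 0 ? imu[nearestIndex(imu, fix.timestamp)] : null,
  }));
}
```

Nearest-neighbor matching is the simplest option. If the IMU runs at a much higher rate than the GPS, averaging or interpolating the readings around each fix may suit training better.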

The Event Types

Eight categories, all AI-detected at the edge, with more coming soon.

| Type | What It Captures |
| --- | --- |
| HARSH_BRAKING | Hard deceleration events |
| AGGRESSIVE_ACCELERATION | Rapid speed increases |
| SWERVING | Sudden lateral movement |
| HIGH_SPEED | Exceeding speed thresholds |
| HIGH_G_FORCE | High lateral/longitudinal forces |
| STOP_SIGN_VIOLATION | Failure to stop |
| TRAFFIC_LIGHT_VIOLATION | Red light runs |
| TAILGATING | Unsafe following distance |

These aren't hand-labeled. They're computed from sensor data in real time, then indexed for search. Here are a few examples (in GIF format for easier consumption).

Two Endpoints, Simple Workflow

1. Search (POST /aievents/search)

Define your dataset recipe: time window, event types, geographic polygon. See full API specs here.

    const response = await fetch('https://beemaps.com/api/developer/aievents/search', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': 'YOUR_API_KEY'
      },
      body: JSON.stringify({
        // Time window (max 31 days)
        startDate: '2026-01-01T00:00:00.000Z',
        endDate: '2026-01-21T23:59:59.000Z',
        types: ['HARSH_BRAKING', 'SWERVING'],
        // Closed ring of longitude/latitude points: first and last match
        polygon: [
          { 0: -122.5, 1: 37.5 },
          { 0: -122.0, 1: 37.5 },
          { 0: -122.0, 1: 38.0 },
          { 0: -122.5, 1: 38.0 },
          { 0: -122.5, 1: 37.5 }
        ],
        // Page size (max 500) and starting position
        limit: 100,
        offset: 0
      })
    })

Constraints: max 31-day range, max 500 results per request. Paginate with limit/offset.

2. Get by ID (GET /aievents/{id})

Fetch full details + video URL for specific events. See complete API specs here.

    // Basic fetch
    const event = await fetch(
      'https://beemaps.com/api/developer/aievents/697100eef68342b197b78418',
      { headers: { 'Authorization': 'YOUR_API_KEY' } }
    )

    // With sensor expansion
    const eventWithSensors = await fetch(
      'https://beemaps.com/api/developer/aievents/697100eef68342b197b78418?includeGnssData=true&includeImuData=true',
      { headers: { 'Authorization': 'YOUR_API_KEY' } }
    )

Example of an app displaying a video with full IMU and GNSS data for the entirety of this drivepath.
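The search constraints stated earlier (31-day maximum range, 500 results per request) suggest splitting longer date ranges into windows and paging through each one. Here is a minimal sketch, assuming a caller-supplied searchPage function that wraps the POST /aievents/search call and resolves to an array of events. That wrapper, and the rule that a short page signals the end of results, are assumptions here, not documented behavior.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Split [start, end) into consecutive windows of at most maxDays days.
// Adjacent windows share a boundary instant, so de-duplicate by event ID
// downstream if the API turns out to treat both ends as inclusive.
function splitIntoWindows(start, end, maxDays = 31) {
  const windows = [];
  let cursor = new Date(start).getTime();
  const stop = new Date(end).getTime();
  while (cursor < stop) {
    const next = Math.min(cursor + maxDays * DAY_MS, stop);
    windows.push({
      startDate: new Date(cursor).toISOString(),
      endDate: new Date(next).toISOString(),
    });
    cursor = next;
  }
  return windows;
}

// searchPage is a hypothetical wrapper:
//   ({ startDate, endDate, limit, offset }) => Promise<Array<event>>
async function collectEvents(searchPage, start, end, limit = 500) {
  const events = [];
  for (const range of splitIntoWindows(start, end)) {
    let offset = 0;
    while (true) {
      const page = await searchPage({ ...range, limit, offset });
      events.push(...page);
      // Assumed stop condition: a page shorter than the limit is the last one.
      if (page.length < limit) break;
      offset += limit;
    }
  }
  return events;
}
```

Injecting searchPage keeps the paging logic independent of headers, filters, and error handling, which can live in the wrapper.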

What This Unlocks

World model training. Video paired with ego-vehicle signals (speed, steering proxy, acceleration) for action-conditioned generation. The data bottleneck for world models isn't compute; it's fresh, diverse, sensor-rich driving video. We have it.

Edge case mining. Near-misses, emergency maneuvers, unusual interactions. The stuff that's expensive to stage and time-consuming to find in raw footage. Query it directly.

Behavior cloning datasets. Real driver reactions to real stimuli with ground-truth control proxies from sensor data.

Simulation validation. Benchmark your synthetic scenarios against authentic real-world events.
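As one illustration of a "control proxy" derived from sensor data, the sketch below estimates speed between consecutive GPS fixes using the haversine formula. The sample shape (lat and lon in degrees, timestamp in milliseconds) is an assumption for illustration, and a real pipeline would likely smooth the result or fuse it with IMU readings.

```javascript
const EARTH_RADIUS_M = 6371000; // mean Earth radius in meters

const toRad = (deg) => (deg * Math.PI) / 180;

// Great-circle distance in meters between two { lat, lon } points (haversine).
function haversineMeters(a, b) {
  const dLat = toRad(b.lat - a.lat);
  const dLon = toRad(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(h));
}

// Speed in m/s between consecutive fixes; the result has fixes.length - 1 entries.
// Entries are null where timestamps do not increase, since speed is undefined there.
function speedsFromFixes(fixes) {
  const speeds = [];
  for (let i = 1; i < fixes.length; i++) {
    const dtSeconds = (fixes[i].timestamp - fixes[i - 1].timestamp) / 1000;
    speeds.push(
      dtSeconds > 0 ? haversineMeters(fixes[i - 1], fixes[i]) / dtSeconds : null
    );
  }
  return speeds;
}
```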

The Pattern That Works

- Search in rolling windows (weekly/monthly)

- Paginate through results (limit/offset)

- Store event IDs + basic metadata locally

- Shortlist candidates for training

- Fetch details + sensor expansion only for shortlisted items

- Download video clips
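The shortlist and expansion steps can be sketched as below. The base URL and query parameters come from the request examples earlier in this post. The shape of the stored records ({ id, type }), the shortlist predicate, and the fetchJson helper are hypothetical.

```javascript
const BASE_URL = 'https://beemaps.com/api/developer/aievents';

// Build the detail URL, adding sensor expansion only when requested.
function eventUrl(id, { gnss = false, imu = false } = {}) {
  const params = new URLSearchParams();
  if (gnss) params.set('includeGnssData', 'true');
  if (imu) params.set('includeImuData', 'true');
  const query = params.toString();
  return `${BASE_URL}/${encodeURIComponent(id)}${query ? `?${query}` : ''}`;
}

// Fetch expanded details for shortlisted events only.
//   stored:    lightweight records saved from search (hypothetical shape: { id, type })
//   keep:      the caller's shortlist predicate
//   fetchJson: injected (url) => Promise<object>, e.g. a fetch wrapper that adds auth
async function expandShortlist(stored, keep, fetchJson) {
  const details = [];
  for (const { id } of stored.filter(keep)) {
    // Sequential requests keep the sketch simple; rate limits are not covered here.
    details.push(await fetchJson(eventUrl(id, { gnss: true, imu: true })));
  }
  return details;
}
```

A fetchJson along the lines of (url) => fetch(url, { headers: { Authorization: 'YOUR_API_KEY' } }).then((r) => r.json()) would fit, assuming the endpoint returns JSON.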

Don't pass includeGnssData=true or includeImuData=true for every search result. That's wasteful. Filter first, expand later.

Why This Exists

Google and Apple give you static imagery—digital museums that age by the day. Mobileye collects metadata but keeps it locked to their chips. Tesla has great data but it's closed.

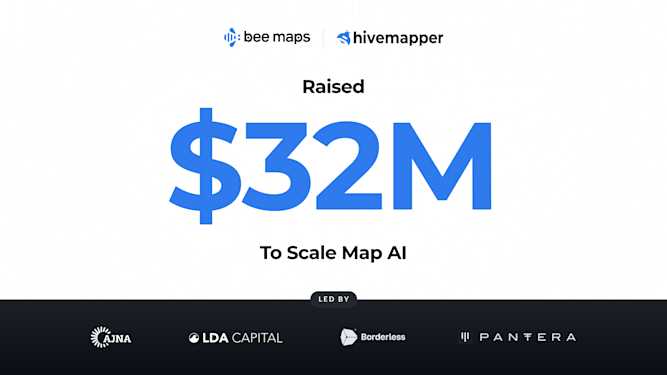

We built Bee Maps to make fresh map data a utility, not a walled garden. 36% of world roads mapped, data that's days to weeks old instead of months to years.

AI Event Videos is the same philosophy applied to training data. Action-rich clips, queryable at API scale, with optional deep sensor expansion for teams doing serious AI work.

If you're building world models, ADAS systems, or simulation infrastructure—and you're tired of the curation grind—get an API key and start querying. Or reach out and say hi.