The word "agent" has been co-opted by chatbots. Ask most engineers what an AI agent does and they'll describe something that writes emails, summarizes documents, or generates code. But there's a harder, more consequential version of the agent problem: building AI that operates in the physical world — observing streets, detecting objects, and making decisions at the speed of a vehicle moving through traffic.

That's what Bee Edge AI enables. It's a platform for deploying custom AI workloads directly on Bee cameras — running object detection and machine learning inference on onboard hardware instead of routing everything through the cloud. The result is a globally distributed network of programmable sensors that developers can task with virtually any visual detection or classification challenge.

Edge Compute, Not Cloud Latency

The traditional approach to camera-based AI follows a familiar pattern: capture frames, upload them to a server, run inference in the cloud, and return results. It works, but it's slow, expensive, and fragile. It depends on cellular connectivity. It burns bandwidth. And it introduces latency that makes real-time detection impractical.

Bee Edge AI eliminates that round-trip entirely. Each Bee camera carries a 5.1 TOPS neural processing unit that runs object detection and classification models directly on the device. Detections happen in milliseconds — while the vehicle is still in the scene, not minutes later when a cloud server gets around to processing the upload.
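To put rough numbers on the latency argument, here is a small Python calculation of how far a vehicle travels while it waits for an inference result. Only the 30 FPS frame rate comes from the camera spec. The 50 km/h speed and the 2-second cloud round trip are illustrative assumptions, not measured Bee figures.

```python
# Back-of-the-envelope latency arithmetic. The speed and the cloud round-trip
# time are illustrative assumptions, not measured Bee numbers.
FRAME_RATE_FPS = 30        # camera capture rate
SPEED_KMH = 50.0           # assumed urban driving speed
CLOUD_ROUND_TRIP_S = 2.0   # assumed upload + inference + response time


def meters_traveled(speed_kmh: float, latency_s: float) -> float:
    """Distance covered while waiting latency_s seconds for a result."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_ms * latency_s


frame_interval_s = 1 / FRAME_RATE_FPS  # time between frames at 30 FPS
for label, latency in [("one frame interval", frame_interval_s),
                       ("cloud round trip", CLOUD_ROUND_TRIP_S)]:
    print(f"{label}: {meters_traveled(SPEED_KMH, latency):.1f} m")
```

Under these assumptions the vehicle moves about half a metre per frame interval but roughly 28 metres during the round trip, often enough to leave the scene entirely.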

This isn't just a performance optimization. It changes what's architecturally possible. When inference is local, you can react to what you see. You can trigger immediate data captures based on detection results. You can run continuous classification without worrying about bandwidth budgets or API rate limits.

Hardware Built for the Task

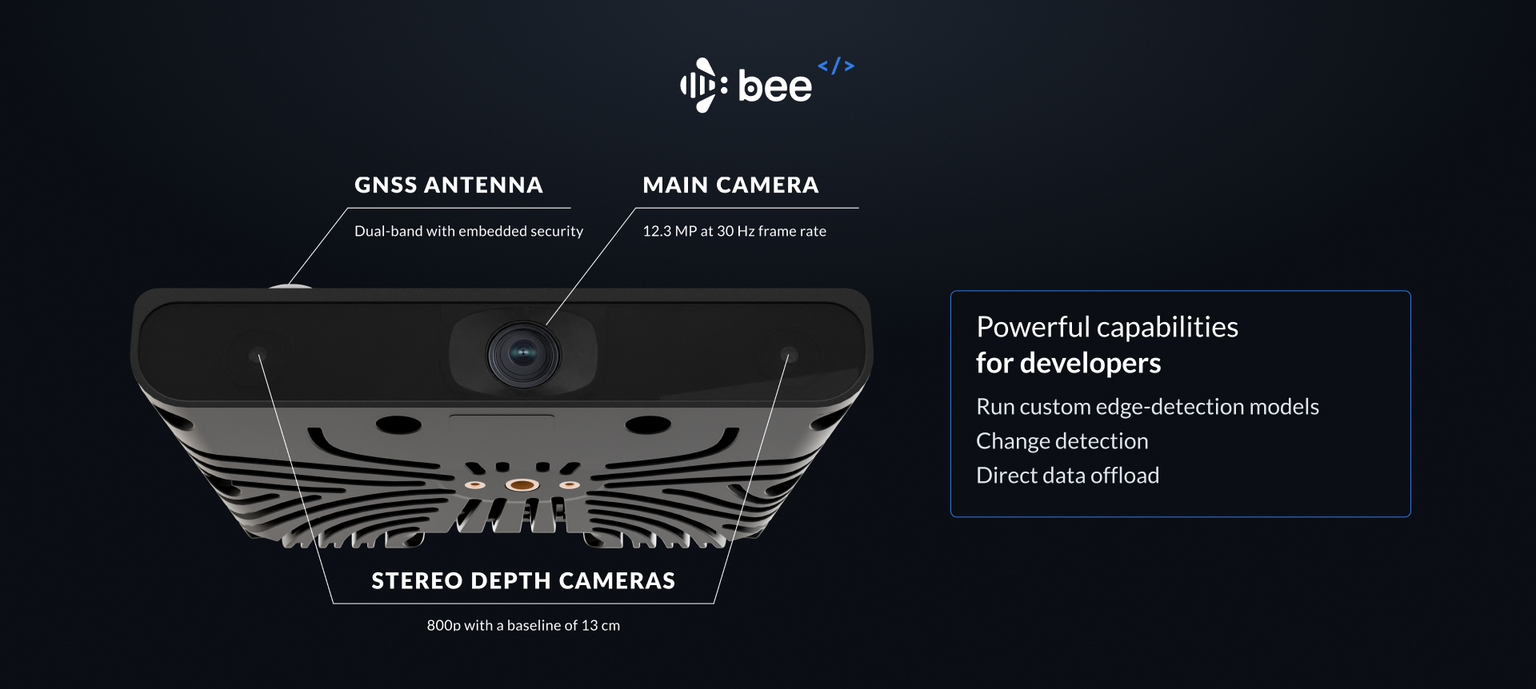

The Bee camera isn't a repurposed webcam with a compute module bolted on. It's purpose-built for mobile sensing:

| Component | Specification |
|---|---|
| Camera | 12.3MP RGB, 30 FPS |
| Depth | Stereo depth imagery for distance estimation |
| Compute | 5.1 TOPS NPU for on-device inference |
| Positioning | GPS, IMU, 6-axis accelerometer |
| Calibration | Device-specific calibration data for precise positioning |
| Connectivity | LTE for real-time streaming, WiFi for bulk transfers |

The stereo depth capability is particularly interesting. It means your models don't just know what they see — they know how far away it is. Combined with GPS and IMU data, every detection comes with precise geospatial context: where it was, when it was, and the exact position of the camera that captured it.
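The geometry behind "how far away it is" and "where it was" can be sketched with textbook formulas: depth from a rectified stereo pair is Z = f·B/d, the lateral offset follows from the pinhole model, and the camera's heading rotates that offset into north/east metres. This is a minimal sketch, not how Bee computes positions on the device. The focal length, baseline, disparity and pixel values are hypothetical (real ones would come from the device-specific calibration data, whose format is not assumed here), and mounting offsets, pitch and roll are ignored.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo relation for a rectified pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def camera_to_north_east(depth_m: float, u_px: float, cx_px: float,
                         focal_px: float, heading_deg: float) -> tuple[float, float]:
    """Convert forward depth plus image column into north/east offsets in metres.

    Lateral offset uses X = (u - cx) * Z / f; positive X is to the right of
    the camera, i.e. clockwise of the heading (degrees clockwise from north).
    """
    x_right = (u_px - cx_px) * depth_m / focal_px
    h = math.radians(heading_deg)
    north = depth_m * math.cos(h) - x_right * math.sin(h)
    east = depth_m * math.sin(h) + x_right * math.cos(h)
    return north, east


def offset_latlon(lat_deg: float, lon_deg: float,
                  north_m: float, east_m: float) -> tuple[float, float]:
    """Shift a GPS fix by local metres (flat-Earth approximation, fine at dashcam ranges)."""
    d_lat = math.degrees(north_m / EARTH_RADIUS_M)
    d_lon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + d_lat, lon_deg + d_lon


# Hypothetical calibration and detection values for illustration.
depth = stereo_depth_m(focal_px=1400, baseline_m=0.12, disparity_px=8.4)
north, east = camera_to_north_east(depth, u_px=1100, cx_px=960, focal_px=1400, heading_deg=90)
print(depth, offset_latlon(37.7749, -122.4194, north, east))
```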

Deploy Like Software, Not Hardware

Here's where Bee Edge AI diverges from traditional edge computing. Most edge AI deployments mean shipping hardware, installing firmware via USB, and managing device-by-device updates. Bee Edge AI treats deployment like a software problem.

New AI modules deploy via over-the-air (OTA) updates through Bee Maps infrastructure. Write your model, package it, push it out — and every targeted device in the fleet picks it up automatically. No truck rolls. No field technicians. No firmware management.

This means you can go from idea to global deployment in hours, not hardware cycles. Found an interesting detection pattern in San Francisco? Deploy it to Tokyo by the end of the day. Need to update a model's weights based on new training data? Push an OTA update and every device in the fleet is running the new version by morning.

Geographic Precision

Not every AI workload needs to run everywhere. Bee Edge AI supports geographic targeting at multiple levels — countries, states, metropolitan areas, or individual cities. Devices automatically receive and execute modules only when operating in targeted zones.

This enables some compelling deployment patterns. You can run a construction zone detector only in regions where you have active customers. You can deploy a speed sign classifier in countries where you need speed limit data. You can target a specific corridor or intersection by defining a GeoJSON boundary.
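The article doesn't say how a device decides it is inside a targeted zone. One standard way to test a position against a GeoJSON boundary is the even-odd ray-casting rule, sketched below. The function names are hypothetical, not part of the Bee SDK; the sketch handles only `Polygon` geometries (outer ring plus holes) and treats `[longitude, latitude]` pairs (GeoJSON's axis order) as planar, which is fine at city scale but ignores antimeridian crossings.

```python
def point_in_ring(lon: float, lat: float, ring: list[list[float]]) -> bool:
    """Even-odd ray casting against one linear ring of [lon, lat] pairs."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i][:2]
        x2, y2 = ring[(i + 1) % n][:2]
        # Only edges that straddle the point's latitude can cross an eastward ray;
        # this check also guarantees y2 != y1 in the division below.
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def point_in_polygon(lon: float, lat: float, geometry: dict) -> bool:
    """True if the point is inside a GeoJSON Polygon's outer ring and outside all holes."""
    if geometry.get("type") != "Polygon":
        raise ValueError("this sketch only handles Polygon geometries")
    outer, *holes = geometry["coordinates"]
    return point_in_ring(lon, lat, outer) and not any(
        point_in_ring(lon, lat, hole) for hole in holes
    )


zone = {"type": "Polygon", "coordinates": [[[0, 0], [1, 0], [1, 1], [0, 1], [0, 0]]]}
print(point_in_polygon(0.5, 0.5, zone), point_in_polygon(1.5, 0.5, zone))
```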

The geographic system is dynamic, too. Devices that travel between regions automatically pick up and shed modules as they enter and exit targeted zones. A delivery fleet that operates across multiple states will run different detection workloads depending on where each vehicle is at any given moment.
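Conceptually, picking up and shedding modules reduces to recomputing which modules' zones contain the current position and diffing that set against what is already running. The sketch below uses hypothetical rectangular zones and module names to keep it short; it illustrates the idea and does not describe Bee's actual scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BBoxZone:
    """Hypothetical rectangular target zone (a real one might be a GeoJSON polygon)."""
    min_lon: float
    min_lat: float
    max_lon: float
    max_lat: float

    def contains(self, lon: float, lat: float) -> bool:
        return self.min_lon <= lon <= self.max_lon and self.min_lat <= lat <= self.max_lat


def wanted_modules(lon: float, lat: float, targets: dict[str, list[BBoxZone]]) -> set[str]:
    """Names of modules whose target zones include the current position."""
    return {name for name, zones in targets.items()
            if any(zone.contains(lon, lat) for zone in zones)}


def reconcile(running: set[str], wanted: set[str]) -> tuple[set[str], set[str]]:
    """Return (to_start, to_stop) so the running set matches the wanted set."""
    return wanted - running, running - wanted


# Hypothetical targets: one module limited to San Francisco, one covering a wider region.
targets = {
    "construction_zones": [BBoxZone(-122.52, 37.70, -122.35, 37.83)],
    "speed_signs": [BBoxZone(-125.0, 32.0, -114.0, 42.0)],
}
running = wanted_modules(-122.42, 37.77, targets)        # in San Francisco
to_start, to_stop = reconcile(running, wanted_modules(-121.49, 38.58, targets))  # Sacramento
print(to_start, to_stop)
```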

Smart Data Routing

Edge inference generates data, and that data needs to get off the device. Bee Edge AI handles this with an intelligent routing system that matches payload size to connectivity:

- JSON detections (well under 1KB): streamed in real time over LTE. When your model detects a speed sign, the structured result (class, confidence, GPS coordinates, timestamp) arrives at your endpoint immediately.

- Frame crops (~50KB): delivered immediately over LTE. A cropped image of a detection is small enough to send in real time.

- Full 12.3MP frames (~2MB): batched over WiFi. High-resolution captures queue up and sync when the device connects to WiFi, keeping LTE costs low.

This means you can design modules that stream lightweight detection metadata in real time while batching the heavy imagery for later processing, getting both immediacy and resolution.
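The routing rule above can be sketched as a size-based decision plus a WiFi queue. This is an illustration, not Bee's implementation: the `Uplink` class, the payload kinds and the 100KB cutoff are assumptions. Any threshold between the ~50KB crops and the ~2MB full frames reproduces the split the article describes.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    LTE_REALTIME = "lte_realtime"
    WIFI_BATCH = "wifi_batch"


# Hypothetical cutoff sitting between ~50 KB crops and ~2 MB full frames.
LTE_MAX_BYTES = 100 * 1024


@dataclass
class Payload:
    kind: str    # e.g. "detection_json", "crop", "full_frame" (illustrative labels)
    data: bytes


def choose_route(payload: Payload) -> Route:
    """Small payloads go out now over LTE; large ones wait for WiFi."""
    return Route.LTE_REALTIME if len(payload.data) <= LTE_MAX_BYTES else Route.WIFI_BATCH


class Uplink:
    """Toy uplink: records LTE sends immediately, holds large payloads until WiFi."""

    def __init__(self) -> None:
        self.sent_lte: list[Payload] = []
        self.wifi_queue: list[Payload] = []

    def submit(self, payload: Payload) -> Route:
        route = choose_route(payload)
        target = self.sent_lte if route is Route.LTE_REALTIME else self.wifi_queue
        target.append(payload)
        return route

    def on_wifi_connected(self) -> list[Payload]:
        """Flush queued high-resolution frames once WiFi is available."""
        flushed, self.wifi_queue = self.wifi_queue, []
        return flushed
```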

Use Cases: What You Can Build

The platform supports two primary model types — detection models (localizing objects with bounding box coordinates) and classification models (binary or multi-class decisions about what appears in a scene). Here's what developers are building with them.
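The two model types differ mainly in what they return: a detection carries a bounding box, while a classification carries only a label and a score. Below is a sketch of one event structure that covers both, serialized to the kind of compact JSON described in the routing section. The schema and field names are assumptions for illustration; the SDK's actual output format is not documented here.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class BoundingBox:
    """Pixel coordinates of a detected object (top-left and bottom-right corners)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int


@dataclass
class Event:
    """Hypothetical event schema; field names are illustrative, not Bee's."""
    label: str
    confidence: float
    lat: float
    lon: float
    timestamp: str                       # ISO 8601, UTC
    bbox: Optional[BoundingBox] = None   # set by detection models, None for classifiers

    def to_json(self) -> str:
        # asdict() also converts the nested BoundingBox into a plain dict.
        return json.dumps(asdict(self))


detection = Event("speed_sign", 0.91, 37.7749, -122.4194, "2024-05-01T12:00:00Z",
                  BoundingBox(412, 188, 470, 260))
classification = Event("for_lease_sign", 0.88, 37.7751, -122.4180, "2024-05-01T12:00:05Z")
print(detection.to_json())
print(classification.to_json())
```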

Retail and POI Monitoring

Every delivery vehicle, rideshare car, and fleet truck is already driving past thousands of storefronts every day. Bee Edge AI lets you turn those drives into a real-time business intelligence feed.

Deploy a classifier that detects storefront changes — "for lease" signs appearing in windows, new business signage replacing old ones, or "permanently closed" notices. The model runs on every frame, and when it spots a change, it fires a structured event with the business location, a cropped image, and the classification result.
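A per-frame classifier produces a stream of labels. Turning that stream into "change" events means remembering the last confident label per location and emitting only when it differs. The tracker below is a hypothetical helper: it assumes the caller supplies a stable location key (for example a POI identifier), and it treats the first confident sighting as a baseline rather than an event.

```python
from typing import Optional


class StorefrontChangeTracker:
    """Emit an event only when a location's confident label differs from the last one."""

    def __init__(self, min_confidence: float = 0.8) -> None:
        self.min_confidence = min_confidence
        self.last_label: dict[str, str] = {}

    def observe(self, location_id: str, label: str, confidence: float) -> Optional[dict]:
        if confidence < self.min_confidence:
            return None  # too uncertain to update state or report a change
        previous = self.last_label.get(location_id)
        self.last_label[location_id] = label
        if previous is None or previous == label:
            return None  # first sighting (baseline) or no change
        return {"location_id": location_id, "from": previous, "to": label,
                "confidence": confidence}


tracker = StorefrontChangeTracker()
tracker.observe("poi-123", "open", 0.95)
print(tracker.observe("poi-123", "for_lease", 0.92))
```

Requiring a minimum confidence before updating state keeps a single blurry frame from registering as a closure.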

The applications are immediate. POI database companies can detect new openings and closures weeks before they appear in any directory. Commercial real estate firms can monitor vacancy rates across entire metro areas without sending a single scout. Retail analytics platforms can track brand presence — noticing when a Starbucks becomes a Peet's Coffee or when a new franchise opens in a developing corridor.

Intersection Safety Analysis

Cities and transportation planners have long wanted better data about what actually happens at dangerous intersections. Traditional approaches — manual traffic counts, fixed cameras with limited fields of view — are expensive to deploy and maintain.

Bee Edge AI offers a different model. Define a GeoJSON boundary around target intersections, and every Bee-equipped vehicle that passes through captures multi-angle footage. Pair that with an on-device model that detects traffic lights, pedestrian crossings, and vehicle trajectories, and you get structured data about near-misses, red-light violations, and pedestrian conflicts — all collected passively by vehicles that were driving through anyway.
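Defining the boundary amounts to writing a GeoJSON (RFC 7946) Polygon. Here is a minimal sketch that builds a square around an intersection's center point. The helper name, the 50 m half-width and the `properties` contents are assumptions, and how Bee Maps ingests the boundary is not shown.

```python
import json
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def intersection_boundary(lat: float, lon: float, half_width_m: float = 50.0) -> dict:
    """Return a GeoJSON Feature whose Polygon is a square centred on (lat, lon).

    Metres are converted to degrees with a local approximation, which is
    adequate for a box tens of metres across.
    """
    d_lat = math.degrees(half_width_m / EARTH_RADIUS_M)
    d_lon = math.degrees(half_width_m / (EARTH_RADIUS_M * math.cos(math.radians(lat))))
    # Counterclockwise exterior ring in [lon, lat] order, closed by repeating
    # the first position, as RFC 7946 specifies.
    ring = [
        [lon - d_lon, lat - d_lat],
        [lon + d_lon, lat - d_lat],
        [lon + d_lon, lat + d_lat],
        [lon - d_lon, lat + d_lat],
        [lon - d_lon, lat - d_lat],
    ]
    return {
        "type": "Feature",
        "properties": {"name": "target intersection"},
        "geometry": {"type": "Polygon", "coordinates": [ring]},
    }


print(json.dumps(intersection_boundary(37.7749, -122.4194), indent=2))
```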

Because deployment is geographic and OTA, a city can spin up monitoring at a newly identified high-risk intersection in hours and decommission it just as quickly once the analysis is complete.

Edge Case Collection for Autonomous Vehicles

AV companies face a well-known data problem: the long tail. The common driving scenarios — highway cruising, normal lane changes, standard intersections — are well-represented in training sets. The rare but critical scenarios — a wheelchair user crossing mid-block, a child chasing a ball into the street, an oversized load on a rural highway — require millions of miles of driving to encounter naturally.

Bee Edge AI inverts this problem. Instead of driving millions of miles and hoping to encounter rare events, deploy a lightweight classifier specifically trained to identify them. Run it across thousands of vehicles in dozens of cities. When the model spots a pedestrian with a stroller, a wheelchair, an animal crossing, or an unusual vehicle, it triggers an immediate high-resolution capture with full sensor context — GPS, IMU, depth, and timestamp.
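At 30 FPS, a classifier that fires on every frame containing a rare subject would request dozens of high-resolution captures for a single encounter. The sketch below pairs a confidence threshold with a per-label cooldown. The label set, threshold and cooldown are hypothetical, and the injectable clock is there only to make the logic testable.

```python
import time
from typing import Callable

# Hypothetical long-tail labels a targeted classifier might emit.
RARE_LABELS = {"stroller", "wheelchair", "animal_crossing", "oversized_load"}


class RareEventTrigger:
    """Decide whether a classifier output should trigger a full-resolution capture."""

    def __init__(self, threshold: float = 0.7, cooldown_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown_s = cooldown_s
        self.clock = clock
        self._last_fired: dict[str, float] = {}

    def should_capture(self, label: str, confidence: float) -> bool:
        if label not in RARE_LABELS or confidence < self.threshold:
            return False
        now = self.clock()
        last = self._last_fired.get(label)
        if last is not None and now - last < self.cooldown_s:
            return False  # same kind of event captured moments ago
        self._last_fired[label] = now
        return True
```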

The result is a targeted pipeline for collecting exactly the training data that AV models need most, at a fraction of the cost of operating a dedicated data collection fleet.

World Model Training

Foundation model companies building world models — AI systems that understand how the physical world looks, moves, and changes — need vast quantities of diverse, synchronized sensor data. Not just video, but video paired with depth maps, IMU readings, and precise geolocation across different road types, weather conditions, lighting environments, and geographic regions.

Bee Edge AI can collect this data systematically. Deploy a module that captures synchronized frames, stereo depth, and IMU telemetry at defined intervals or when triggered by specific scene conditions. Target it globally, or focus on underrepresented regions — rural roads in Southeast Asia, mountain passes in the Alps, dense urban cores in West Africa.
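"Synchronized" capture usually means pairing each sampled frame with the sensor reading closest to it in time. The sketch below covers the interval case: it samples frames every `interval_s` seconds and attaches the nearest IMU sample by binary search. It drops a frame when no reading falls within a skew tolerance. The function names, the 10 ms tolerance and the timestamp representation (seconds as floats, sorted ascending) are assumptions.

```python
from bisect import bisect_left


def nearest_index(timestamps: list[float], t: float) -> int:
    """Index of the timestamp closest to t in a non-empty, ascending list."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def bundle_frames(frame_ts: list[float], imu_ts: list[float], imu_values: list,
                  interval_s: float, max_skew_s: float = 0.01) -> list[dict]:
    """Sample a frame every interval_s seconds and attach the nearest IMU reading."""
    if not imu_ts:
        return []
    bundles, next_t = [], None
    for t in frame_ts:
        if next_t is not None and t < next_t:
            continue  # not yet time for the next sample
        j = nearest_index(imu_ts, t)
        if abs(imu_ts[j] - t) > max_skew_s:
            continue  # no IMU reading close enough; skip rather than mis-pair
        bundles.append({"frame_ts": t, "imu_ts": imu_ts[j], "imu": imu_values[j]})
        next_t = t + interval_s
    return bundles
```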

The geographic targeting system means you can deliberately fill gaps in your training distribution rather than over-indexing on the same well-mapped corridors that every AV company has already driven a thousand times.

Infrastructure Monitoring

Road infrastructure degrades continuously — potholes form, signs fade, lane markings wear away, traffic signals malfunction. Municipalities typically discover these issues through citizen complaints or scheduled inspections, both of which introduce delays.

A fleet of vehicles equipped with Bee cameras and an infrastructure monitoring module can detect these conditions passively, every day, on every road they travel. Deploy a model that classifies road surface conditions, identifies missing or damaged signage, and flags construction zones. Each detection arrives as a structured event with GPS coordinates, a confidence score, and a supporting image.

Public works departments get a continuously updated map of infrastructure conditions across their entire road network — not from a dedicated survey vehicle that visits each road once a year, but from the commercial vehicles that are already driving those roads every day.
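A single pothole will be seen by many passing vehicles, so a useful condition map counts sightings per location rather than listing raw events. The number of distinct vehicles gives a rough corroboration signal. The sketch below snaps detections to a fixed lat/lon grid. The cell size (0.0001° is about 11 m of latitude, and less in longitude away from the equator), the confidence cutoff and the dict keys are assumptions.

```python
import math
from collections import defaultdict

CELL_DEG = 0.0001  # hypothetical grid size, about 11 m of latitude


def cell_key(lat: float, lon: float, cell_deg: float = CELL_DEG) -> tuple[int, int]:
    """Snap a coordinate to a fixed lat/lon grid cell."""
    return math.floor(lat / cell_deg), math.floor(lon / cell_deg)


def aggregate(detections: list[dict], min_confidence: float = 0.6) -> dict:
    """Group detections by (label, cell), counting sightings and distinct vehicles.

    Each detection is a dict with hypothetical keys:
    label, confidence, lat, lon, vehicle_id.
    """
    cells = defaultdict(lambda: {"sightings": 0, "vehicles": set()})
    for d in detections:
        if d["confidence"] < min_confidence:
            continue
        key = (d["label"], *cell_key(d["lat"], d["lon"]))
        cells[key]["sightings"] += 1
        cells[key]["vehicles"].add(d["vehicle_id"])
    return dict(cells)
```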

Developer Experience

Bee Edge AI is built for developers who want to ship, not fiddle with hardware. The SDK provides a local development workflow that feels familiar:

Build and iterate locally:

```bash
# Clone the SDK
git clone https://github.com/Hivemapper/bee-plugins.git
cd bee-plugins

# Install dependencies
python3 -m pip install -r requirements.txt

# Build your module
bash build.sh my_module entrypoint.py
```

Test with fixture data — pre-built datasets from San Francisco and Tokyo let you validate your models against real-world imagery without needing a physical device:

```bash
python3 devtools.py -f sf     # Load San Francisco test data
python3 devtools.py -f tokyo  # Load Tokyo test data
```

Deploy to a device with hot-reload for rapid iteration:

```bash
python3 devtools.py -dI           # Activate dev mode
python3 devtools.py -i myplugin.py  # Deploy with auto-restart
python3 devtools.py -R            # Restart the plugin service
```

Access device utilities for calibration, networking, and diagnostics:

```bash
python3 device.py -C > calibration.json  # Extract calibration data
python3 device.py -Wi <ssid> -P <pass>   # Connect to WiFi
python3 device.py -L                     # Switch to LTE
```

The workflow is: build locally, test with fixtures, deploy to a device, iterate with hot-reload, then push to production via OTA. No proprietary IDEs, no vendor-specific toolchains — just Python, a build script, and your models.

The Bigger Picture

The word "agent" is overdue for reclamation. The most interesting agents won't live in chat windows or IDE sidebars. They'll be embedded in the physical world: mounted on windshields, driving through cities, continuously observing and understanding the environment at scale.

Bee Edge AI makes every Bee camera a programmable node in that network. It's not just a dashcam that records video. It's a sensor you can program — deploying new perceptual capabilities as easily as pushing a software update.

The physical world is rich with information that no one is systematically collecting. Storefront changes, road conditions, traffic patterns, infrastructure decay, rare driving events — all of it visible, all of it valuable, all of it going unrecorded because the tools to capture it at scale didn't exist.

Now they do. Build your agent. Deploy it to the fleet. Let the physical world become your dataset.
