The Latest Buzz

There is nothing like a Bee.

For a few minutes, set aside everything you’ve ever known about maps.

Forget the desktop globe from your childhood bedroom, those paper maps you could never remember how to fold back up again. Forget when you first downloaded Google Earth, zooming out until you saw that blue-green marble floating in space. Forget the first time you used a smartphone app to navigate a foreign land where you couldn’t even read the signs – how you felt so lost, and yet so safe.

You’ve lived to see a few life-changing leaps in map technology. If you’re lucky, you will get a few more.

Here’s the thing about those leaps. They don’t come from corporate caretakers working to squeeze out a 1% improvement on what exists today. They come from people who are crazy enough to ask:

If we were rebuilding our maps today, how would we do it?

Starting from scratch today, we wouldn’t make the same decisions we made before. We wouldn’t design an elaborate sensor suite costing tens of thousands of dollars, install it on hundreds of cars, and pay workers to drive around collecting petabytes of data that goes stale as soon as it’s processed.

We wouldn’t teach tens of thousands of hobbyists to draw lines and boxes on satellite imagery in a web app. We wouldn’t count on them to fix the map as the world changes, getting no rewards for their dedicated work while trillion-dollar tech companies subtly repackage and sell the map they built.

These are today’s dominant mapmaking techniques. They made sense in an era when Cameras, Compute and Connectivity – the three C’s of modern mapping – were scarce. But it’s time for the next leap.

It’s time to tap into the latent capacity of the world’s 1.5 billion cars, which are increasingly outfitted with high-precision sensors, ample compute power and always-on connectivity. It’s time to use AI to extract map data, process it “on the edge” using distributed devices instead of paying endless server costs, and update the map in real time. It’s time to build maps passively from the billions of miles of driving that people already do every day – and to reward them fairly.

That’s why we built the Bee.

It’s more than a dashcam. It’s the most efficient mapmaking machine ever made. It’s the culmination of everything we learned while building the Hivemapper Network into the fastest growing mapping project in history. It’s here to help the world’s leading mapmakers take their next big leap.

Keep reading, and you’ll understand why there is nothing like a Bee.

The trilemma of mapmaking

Ask any mapmaker what they consider the gold standard in street-level mapping, and they’ll give you the same answer: dedicated mapping vehicles. After the emergence of Google Street View in the mid-2000s, the entire industry followed Google’s lead and built their own mapping fleets.

It’s a reliable, time-tested approach. But it’s wildly expensive. Between the vehicle, specialized sensors, driver wages, fuel, maintenance, insurance and other administrative costs, maintaining a mapping fleet costs hundreds of thousands of dollars per vehicle, per year. Building a large enough fleet to consistently refresh map data for all 60 million km of roads in the world would cost billions of dollars a year. Forever.

At this price tag, even a trillion-dollar company like Google can only justify collecting ground-truth map data every year or two. No wonder mapmakers are so eager to reduce their reliance on mapping fleets.

The dream solution – the holy grail of modern mapping – is high-quality, crowdsourced data. And there are basically three options for getting it. The first is to tap into smartphones. The second is to rely on specialized consumer devices, such as dashcams. And the third is to rely on sensors built directly into cars.

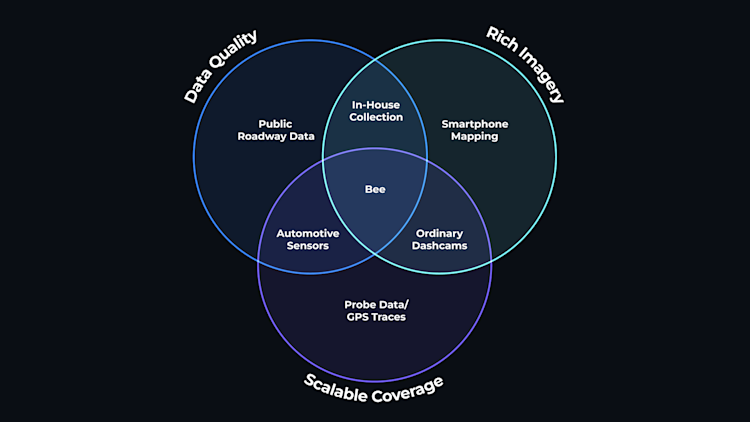

Until now, this has been a trilemma. Building accurate maps requires rich imagery, consistent data quality and scalable coverage. With each of these three approaches, you could have two of them, but you had to sacrifice the third.

All-purpose consumer sensors (smartphones)

Strengths

Scalable Hardware

– Low barrier to entry for contributors. Everyone has a smartphone these days.

– Low barrier to entry for developers. No design or manufacturing headaches.

Weaknesses

Inconsistent Quality

– GPS receivers and antennas lack mapping-grade precision. No option for a stereo camera.

– Smartphones use a wide range of lenses and image settings, so AI models tuned for one device perform inconsistently on others.

– As a result, smartphones can detect map data, but cannot precisely position it.

Not Passive Enough

– People need to remount their phone every time they get in the car.

– Phones tend to overheat while mapping, especially in warm temperatures.

– User friction has repeatedly caused smartphone-based projects to lose momentum.

Bottom Line

Because of the significant friction of mapping with smartphones, no smartphone-based project has been able to consistently refresh map data at scale. In the best-case scenario, early enthusiasts have “completed the map” for certain areas, then lost momentum. For this reason, several projects that started out with generic smartphones have gravitated toward requiring specific devices, or even developing their own hardware.

Specialized aftermarket sensors (dashcams)

Strengths

Scalable Business Model

– Hundreds of millions of drivers around the world use a dashcam for personal or business reasons. In theory, tapping into them for map data should have little incremental cost.

Weaknesses

Quality Problems

– Consumers aren’t willing to pay extra for mapmaking-grade components, so manufacturers are forced to compromise on hardware. As a result, ordinary dashcams are plagued by poor positioning and insufficient compute power to reliably extract map data on the edge.

Legal Problems

– Some dashcam manufacturers monetize map data from contributors without adequate notice or compensation, and cut corners on privacy and data protection compliance.

Bottom Line

Some dashcam companies have built a pipeline for collecting a significant amount of map data, but mapmakers place a low value on these “detections” due to inconsistent data quality. Some mapmakers have chosen to avoid these suppliers altogether to protect themselves from legal and reputational risk.

Automotive edge detections

Strengths

Scalable Business Model

– Millions of cars have ADAS modules with the potential to enable passive map data collection. In theory, tapping into them for map data should have little incremental cost.

Automotive-Grade Quality

– Using computer vision to operate safety-critical functions in a moving car also requires high-quality cameras, precise positioning and ample compute power. As a result, there is excellent overlap with the hardware needed for map data collection.

Weaknesses

Lack of Imagery

– Imagery is critical for auditing and validating map data, but most automakers have been unwilling to grant access to vehicle imagery and have restricted the bandwidth available via in-car telematics to upload map data to the cloud.

Bottom Line

One of the leading ADAS providers has scaled this approach to millions of vehicles, generating a firehose of “detections” that can be accessed by mapmakers. But without imagery to establish the reality on the ground and without a flexible over-the-air update process for improving models and adding mapping capabilities, mapmakers have been unable to replace their own map data collection efforts and have continued to hunt for richer data sources.

–

We designed the Bee to solve the trilemma. It has rich imagery. It has higher quality data than any smartphone or ordinary dashcam. And it offers a scalable model for achieving full global coverage.

–

The finest minds in mapping are always looking ahead to the next exciting innovation. Among them is industry savant James Killick, a veteran MapQuest, ESRI and Apple Maps executive whose Map Happenings blog is widely read by industry insiders. Before all that, he helped launch Etak, the first in-vehicle navigation system.

Looking at mapping from first principles, he has pointed to three critical elements for the next revolutionary change in how humanity makes maps:

1. Eyes

2. Smart processing

3. Streaming

By “eyes,” Killick means cameras. Thanks to falling hardware prices, there are tens of billions of cameras in the world today. Modern cars are one of the main drivers of this growth; a Tesla Model 3 has eight exterior cameras, including three forward-facing cameras looking at the road ahead. The dedicated mapping vehicles employed by legacy mapmakers can’t possibly keep up with that pace of data collection, Killick argues.

“They only drive the streets about once a year — if you’re lucky,” he wrote in a 2023 article. “Sorry guys, but that doesn’t cut it for revolutionizing map production.”

Of course, eyes aren’t enough. Although cloud computing costs continue to fall, constantly collecting imagery for every road in the world would be prohibitively expensive. The answer, Killick says, is “smart processing.” In practice, that means using advanced AI to extract the elements of the world that are useful for mapmaking – and nothing else. That’s critical for privacy and data protection, as well as cost efficiency.

And once a mapmaker can efficiently extract intelligence on the edge, it needs a way to quickly relay that information to map users. What customers want, Killick says, is “a ‘living map’ with almost zero latency.”

Instead of eyes, smart processing and streaming, we at Bee Maps refer to these requirements as the three C’s of modern map data collection:

Cameras

Compute

Connectivity

The industry remains in transition, trying to find a middle ground between the scale of crowdsourced data and the quality of in-house collection. And the first mapmaker to master this process is the one that will have the competitive edge for the next wave of navigation products.

“Bee Maps is clearly showing leadership in mapmaking technology,” Killick says. “But they’re also innovating in business models. Legacy mapmakers should wake up and pay close attention.”

Efficient, cutting-edge Map AI

As is the case in many fields, AI is transforming map data collection.

Spatial vision technology is improving rapidly, and cutting-edge mapmakers use it whenever possible to replace the repetitive work of reviewing imagery and manually updating maps. That saves on labor costs, of course. And when done on distributed devices (“on the edge”), it also drastically reduces the amount of imagery being stored on centralized servers, which helps with privacy and data protection.

We at Bee Maps call this Map AI. At a high level, here’s how Map AI works:

1. The Bee collects 30 fps video for personal use, which enables Map AI to dynamically process the appropriate number of frames to extract useful map data – and no more. For example, there is no reason to process thousands of duplicative frames while a car is parked or sitting at a traffic light.

2. All selected frames go through privacy blurring prior to Map AI processing. All bodies and vehicles detected are irreversibly blurred to help protect the privacy of bystanders. (For a full rundown of privacy policies and protections, see the Hivemapper Network’s privacy documentation.)

3. Map AI uses a custom-tuned version of the YOLOv8 computer vision model to detect relevant map features such as traffic lights and road signs in the privacy-processed keyframes.

In this image, computer vision is used to detect potential map features on the edge from privacy-blurred imagery.

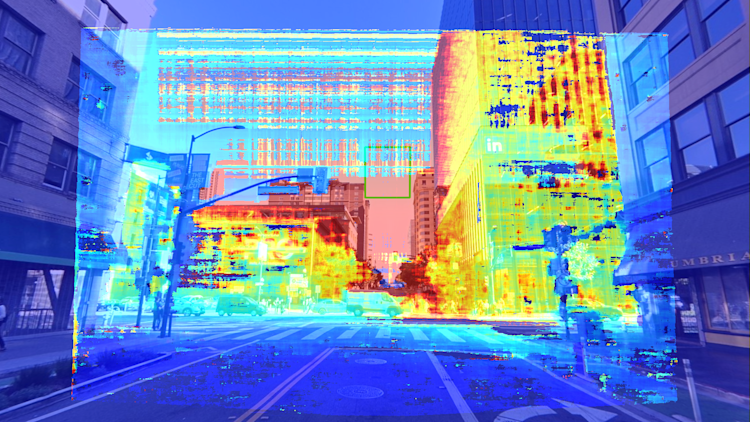

4. By comparing the two images from the Bee’s stereo cameras, Map AI estimates the depth of each pixel, then uses a technique called reprojection to map those depth estimates onto every pixel of the image taken by the central RGB camera.

5. By combining the Bee’s high-precision GPS coordinates, the Bee’s orientation as determined by IMU readings, and the depth map created in step 4, the Bee estimates the precise lat/lon and size of each of the map features detected with computer vision in step 3.

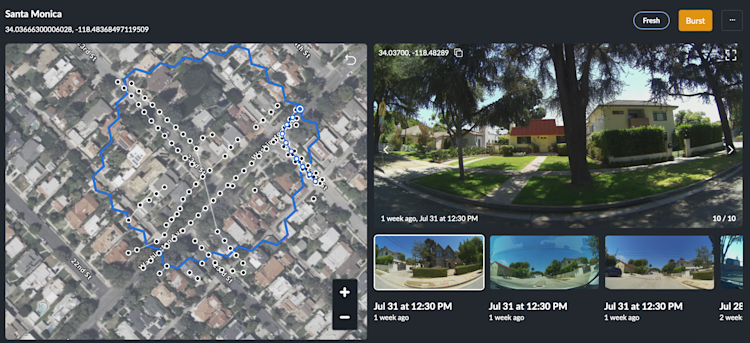

6. The Bee sends the Hivemapper Network a small data packet with the precisely positioned map features from step 5, allowing the end customers of map data to detect changes in close to real time.

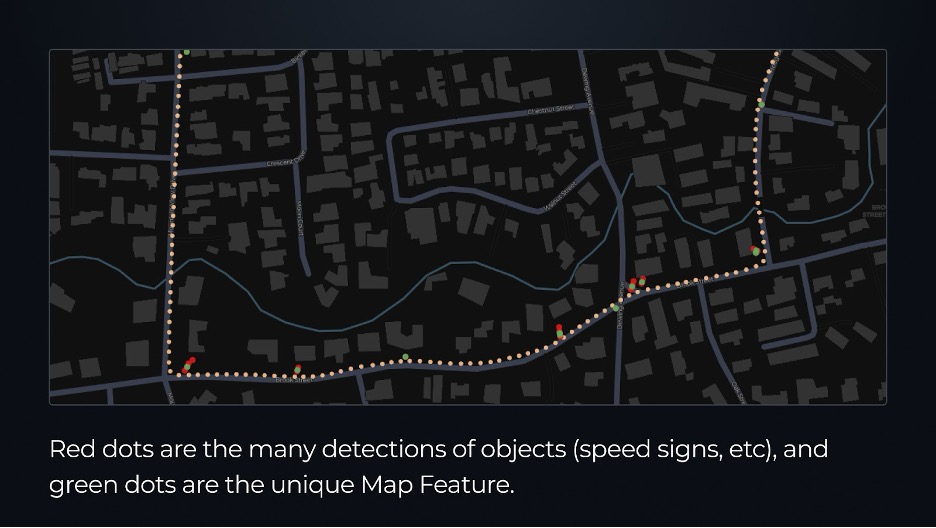

In this image, yellow dots represent keyframes taken every 8m along the drive path. Red dots represent individual detections of an object, and green dots represent unique “map features” generated by de-duplicating any repeated observations of the same object. For a given Map Feature, a Bee device may produce 3 to ~30 object detections before conflating them into a single unique Map Feature that is then uploaded and added to the global map.

7. A human-in-the-loop auditing and quality assurance pipeline ensures that map features have been correctly classified, positioned and deduplicated.
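Step 5 combines the device position, its orientation and the depth estimate to place each detection on the map. The sketch below shows the basic arithmetic under stated assumptions; it is not Bee Maps’ implementation. It assumes the detection’s offset from the camera has already been expressed as meters ahead and meters to the right (from the depth map), that heading comes from the IMU as degrees clockwise from true north, and that a local flat-earth approximation is good enough over the few tens of meters a dashcam can see. The function name `georeference` is hypothetical.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS84 equatorial radius, in meters


def georeference(lat_deg, lon_deg, heading_deg, forward_m, right_m):
    """Hypothetical helper: estimate an object's lat/lon from the device's
    GPS position, its heading (degrees clockwise from true north) and the
    object's offset from the camera (meters ahead, meters to the right)."""
    h = math.radians(heading_deg)
    # Rotate the camera-relative offset into north/east components.
    north = forward_m * math.cos(h) - right_m * math.sin(h)
    east = forward_m * math.sin(h) + right_m * math.cos(h)
    # Convert meters to degrees using a local flat-earth approximation.
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + dlat, lon_deg + dlon


# Example: a sign 20 m ahead and 3 m to the right of a car heading due east.
sign_lat, sign_lon = georeference(37.7749, -122.4194, 90.0, 20.0, 3.0)
```

A production pipeline would also account for the device’s mount height, pitch and roll, and for GPS error; this sketch only shows how position, heading and depth combine into a map coordinate.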

At the end of this process, customers can access what we call Map Features – unique elements of the map. Ultimately, if distributed sensors see the same stop sign 1,000 times, you don't need to hear about it 1,000 times. You want to know if an element of a map appeared, changed, or disappeared. That’s it.
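The caption above describes a device conflating 3 to ~30 detections of the same object into one Map Feature. A minimal sketch of that kind of de-duplication, assuming detections arrive as `(label, lat, lon)` tuples and that two detections of the same class within a fixed radius belong to the same object. The 5 m radius, the greedy running-mean strategy and the names `MapFeature` and `deduplicate` are all illustrative assumptions, not the Bee’s actual algorithm.

```python
import math
from dataclasses import dataclass


@dataclass
class MapFeature:
    """Hypothetical record for one unique map feature."""
    label: str
    lat: float
    lon: float
    count: int = 1  # number of detections merged into this feature


def distance_m(lat1, lon1, lat2, lon2):
    """Equirectangular approximation; adequate for distances of a few meters."""
    r = 6_371_000.0
    x = math.radians(lon2 - lon1) * math.cos(math.radians((lat1 + lat2) / 2))
    y = math.radians(lat2 - lat1)
    return r * math.hypot(x, y)


def deduplicate(detections, radius_m=5.0):
    """Greedily merge detections of the same class that fall within radius_m
    of an existing feature; each feature keeps a running-mean position."""
    features = []
    for label, lat, lon in detections:
        for f in features:
            if f.label == label and distance_m(f.lat, f.lon, lat, lon) <= radius_m:
                f.count += 1
                f.lat += (lat - f.lat) / f.count
                f.lon += (lon - f.lon) / f.count
                break
        else:
            # No nearby feature of this class yet, so start a new one.
            features.append(MapFeature(label, lat, lon))
    return features
```

Averaging several observations also tends to reduce positioning noise, which is one reason to merge repeated detections instead of keeping only the first one.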

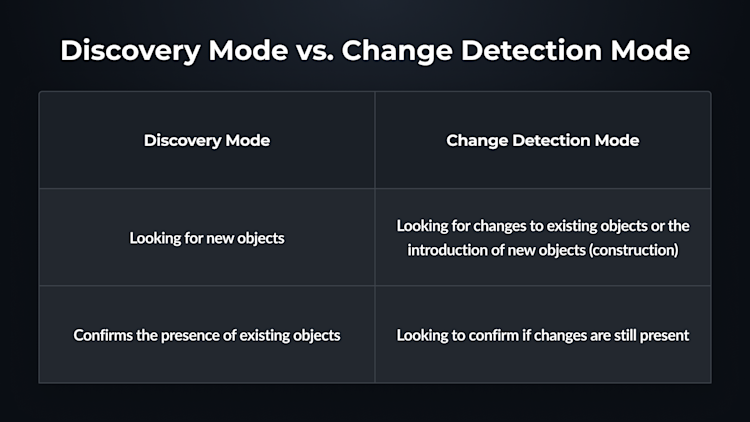

Discovery mode vs. change detection mode

The Bee has two primary mapping modes. Discovery mode focuses on making sure we have mapped all of the objects at a location (speed limit signs, stop signs, turn restrictions, lane definitions, etc.). For example, a simple intersection has either 2 or 4 stop signs, so if we have mapped only 1 or 3 of them, we are clearly still in Discovery mode. Once we have all 4 stop signs at that intersection, the Bee switches to Change Detection mode, where it looks for changes that would alter the intersection, such as a road closure or a conversion to a roundabout. Because the Bee carries the relevant sections of the map on the device, it can very quickly determine what has changed relative to its existing map.
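The two modes can be sketched in a few lines. This assumes a deliberately simplified model: the mode rule encodes only the stop-sign example above, and a location is summarized as a list of feature labels. The names `choose_mode` and `diff_features` are hypothetical and do not reflect the Bee’s firmware.

```python
from collections import Counter


def choose_mode(stop_sign_count, complete_counts=(2, 4)):
    """Hypothetical rule from the intersection example: a simple intersection
    has 2 or 4 stop signs, so any other count means it isn't fully mapped."""
    return "change_detection" if stop_sign_count in complete_counts else "discovery"


def diff_features(known, observed):
    """Compare the labels already in the map for a location with the labels
    just observed there. Returns (appeared, disappeared) as label -> count."""
    known_c, observed_c = Counter(known), Counter(observed)
    appeared = observed_c - known_c     # seen now, but not in the map
    disappeared = known_c - observed_c  # in the map, but not seen now
    return dict(appeared), dict(disappeared)


# A 4-way stop where two stop signs are gone and a yield sign appeared.
appeared, disappeared = diff_features(
    ["stop_sign"] * 4, ["stop_sign"] * 2 + ["yield_sign"]
)
```

A real system would also need to confirm that a feature was actually in the camera’s view, and seen missing on more than one drive, before reporting it as disappeared.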

The capabilities of different mapping approaches

We designed the Bee dashcam to run Map AI better than any other device on the market. It has better positioning, more consistent data quality and a more passive user experience than a typical consumer device. And unlike the ADAS modules in modern cars, the Bee gives mapmakers access to imagery, and it comes standard with a stereo camera that enables more precise and computationally efficient 3D reconstruction of map features.

In the following sections, we’ll go deeper into how the Bee unlocks Map AI at scale.

Imagery you can trust, and use to train AI

Every year, mapmakers become more reliant on probe data from cars, smartphones and other sensors.

They rarely have access to imagery to confirm the truth on the ground. And without imagery to document edge cases, they can’t train models to do a better job of deciphering the truth of the world – which means many of these sources of probe data remain frozen in time with outdated AI models. Bee Maps, however, can flash a simple firmware update so that Map AI gets better and better every month.

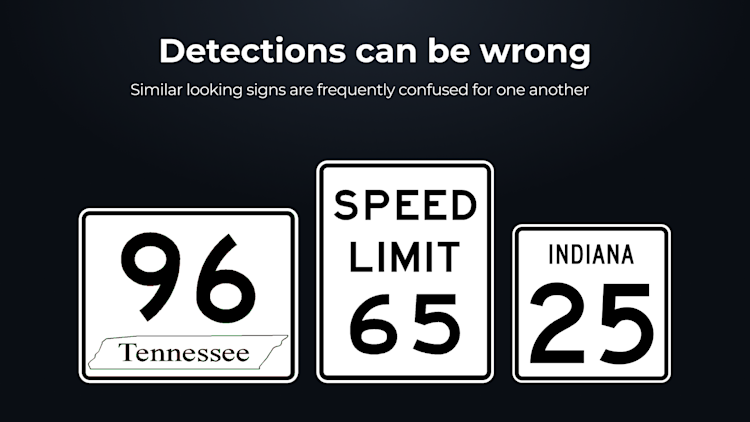

We humans also need a way to audit maps and make sure they’re accurate and safe. We can’t let our maps become an AI-generated black box that’s prone to hallucinations and errors.

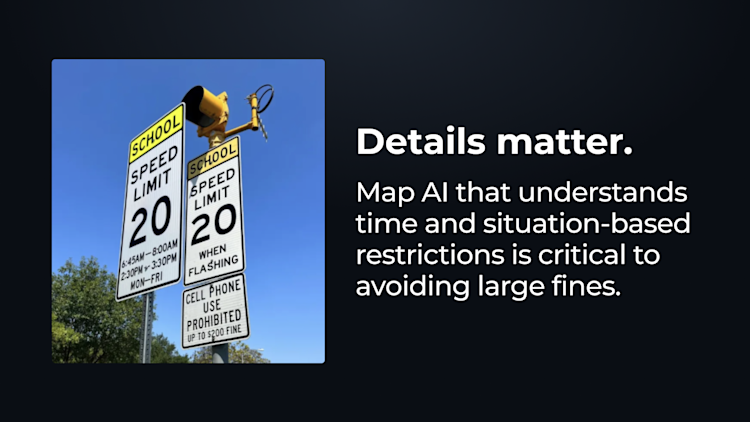

With the Bee, map data customers have access to rich, consistent imagery to back up the metadata generated by Map AI. They can choose between a full, privacy-blurred image and a thumbnail of the map feature that was detected. Here are some examples of why having access to imagery is so critical:

Detections can be incomplete. For example, detecting a school-zone speed limit sign isn’t very useful if the limit only applies during specific hours, written in small print that the detection doesn’t capture.

Detections can fail to provide context. For example, temporary signs often look the same as permanent ones. Detecting a sign isn’t that useful for mapmaking if the sign is only present for a limited time while a construction crew is working. This is obvious to a human eye, but difficult for AI.

Detections can be simply wrong. For example, Tesla owners regularly share examples where computer vision errors cause the Full Self Driving system to adhere to the wrong speed limit. There are endless edge cases where computer vision will fail to provide the right result, but a trained human would.

Dynamic signs. More and more road signage is becoming digital and dynamic. For example, toll prices for express lanes change automatically based on road conditions. Detecting one of these as a generic highway sign is easy; the value comes from Map AI’s ability to read and understand the actual toll prices on the edge.

If your map only picks up new or changed road signage every 30 to 45 minutes, it will be far too slow to keep pace with dynamic signs.

Cameras for mapping, not for selfies

It’s not enough to have just any imagery; Map AI requires the right kind of imagery.

People often look at the beautiful imagery from a modern smartphone and assume it’ll work for mapmaking. But that beautiful image isn’t showing the true face of the world; it’s showing a version with makeup applied.

Different cameras use different stabilizers and distortion parameters on the lenses. They use different digital filters. If you capture data with different devices at the exact same location, you’ll get different color, contrast and distortion – all of which must be corrected for good computer vision performance. Accepting a wide range of cameras means validating every device and standardizing the data. It’s a boondoggle.

Great map data isn’t based on the number of lenses or megapixels. It isn’t about generating the most beautiful picture to human eyes. It’s about consistency and precision in capturing the condition of the real-world objects that comprise a map.

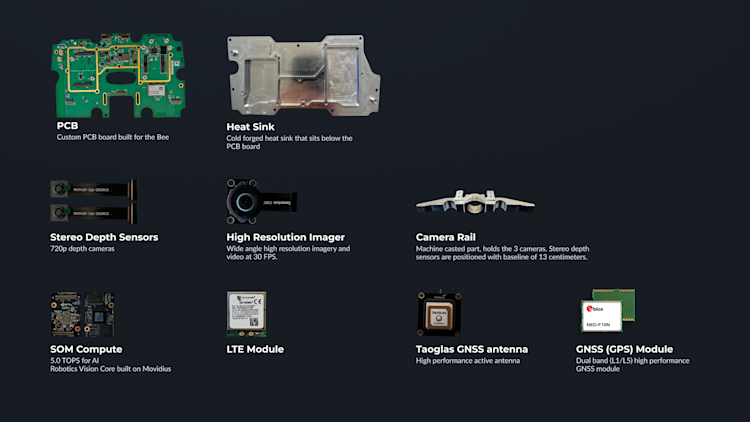

Pullout box: Computer vision on the Bee

Central RGB Camera: 12.3 MP at 30 Hz frame rate

Stereo Depth Cameras: 720p with a baseline of 13 centimeters

Computer Vision Module: Luxonis OAK-SoM-Pro S3 64GB, custom designed for Bee

The stereo camera combined with DepthAI software is one of the critical differences between the Bee and any other crowdsourced map data collection platform. Stereo cameras are common in automotive ADAS modules. You’ll typically find them mounted behind the rear-view mirror, watching the road ahead and gauging distance like human eyes do.

Most modern smartphones have multiple camera lenses, but they’re squeezed together into a small package. Without physical separation (the “baseline” distance), they can’t efficiently compare images to triangulate objects. There are techniques for gauging depth from multiple images taken by a single camera in a continuous sequence, but they are far less accurate and far more computationally intensive than stereo vision.
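Why the baseline matters can be shown with the standard pinhole stereo relation, where depth $Z$ follows from focal length $f$ (in pixels), baseline $B$ and disparity $d$ (in pixels). Differentiating shows how a small disparity error $\delta d$ turns into a depth error $\delta Z$:

```latex
Z = \frac{f\,B}{d}
\qquad\Longrightarrow\qquad
\delta Z \approx \left|\frac{\partial Z}{\partial d}\right|\delta d
        = \frac{f\,B}{d^{2}}\,\delta d
        = \frac{Z^{2}}{f\,B}\,\delta d
```

As a worked example, take the Bee’s 13 cm baseline and assume, purely for illustration, a focal length of 800 px and a matching error of 0.25 px (neither is a published Bee spec). An object 20 m away then has a disparity of 800 × 0.13 / 20 = 5.2 px, and the depth error is about 20² / (800 × 0.13) × 0.25 ≈ 0.96 m. With a hypothetical 1 cm spacing between phone lenses, the same calculation gives about 12.5 m, which is why closely packed lenses struggle to triangulate distant road features.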

The Bee has a stereo camera that has been carefully calibrated for optimal performance inside a fast-moving vehicle. It works using a technique called reprojection.

First, we use GPS data from the Bee to get an accurate estimate of the current position of the dashcam, and combine that with IMU data to understand yaw, pitch and roll relative to the moving vehicle.

Once we know the precise location and orientation of the dashcam, we turn to the depth cameras to triangulate the locations of objects in the real world. By comparing the left and right images, the Bee calculates a “disparity map” (how far each point shifts between the two images) and uses it to estimate each pixel’s distance from the dashcam. We then take those per-pixel distance estimates and, using a calibration matrix, reproject them onto the RGB image coming from the central camera.
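The disparity-to-depth-to-RGB pipeline described above can be sketched with NumPy. This is a minimal illustration of the underlying geometry, assuming an ideal pinhole model with no lens distortion, a stereo camera intrinsic matrix `K_stereo`, an RGB intrinsic matrix `K_rgb`, and an extrinsic rotation `R` and translation `t` from the stereo frame to the RGB frame; those matrices stand in for the “calibration matrix” mentioned in the text, and all function names are hypothetical. DepthAI may perform this alignment on the device itself; the sketch does not describe its internals.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Depth Z = f * B / d for every pixel; non-positive disparity -> NaN."""
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full(disparity.shape, np.nan)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def reproject_to_rgb(depth, K_stereo, K_rgb, R, t):
    """Lift each stereo pixel to a 3D point, move it into the RGB camera frame
    with the extrinsics (R, t), and project it through K_rgb.
    Returns flat arrays (u, v, z): RGB pixel coordinates and depth."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pixels = np.stack([u.ravel(), v.ravel(), np.ones(h * w)])  # 3 x N homogeneous
    rays = np.linalg.inv(K_stereo) @ pixels                     # normalized rays
    points = rays * depth.ravel()                               # 3D, stereo frame
    points_rgb = R @ points + np.asarray(t, dtype=float).reshape(3, 1)
    projected = K_rgb @ points_rgb                              # 3D, RGB frame
    z = projected[2]
    return projected[0] / z, projected[1] / z, z
```

In practice the projected coordinates would be rounded and used to fill a depth image aligned with the 12.3 MP RGB frame, so that every pixel of a detected sign has a distance attached.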

That leaves us with an image like the one below.

Beyond the lenses, the Bee’s mapmaking performance comes from a custom-designed version of the Luxonis OAK-SoM computer vision module, which was specifically designed for running low-power, high-performance AI and depth perception tasks in real time. It’s a workhorse, capable of generating consistent map data all day and night at highway speeds without breaking a sweat.

Modern smartphones have impressive processing specs on paper. But they weren’t designed to perform these kinds of specialized computer vision tasks for hours on end without overheating. (Before getting there, a smartphone will get aggressively throttled by its operating system anyway.)

As the great Alan Kay said: “People who are really serious about software should make their own hardware.” We have. And it makes all the difference.

Inside the Bee

The Bee may look like a dashcam, but no smart dashcam manufacturer would select the components we use in the Bee; they would be overkill for the purposes of a dashcam. Said differently, if you are just building a dashcam for safety capabilities, like Samsara, then you simply don’t need many of the components used in the Bee.

Below is a partial list of components used in the Bee.

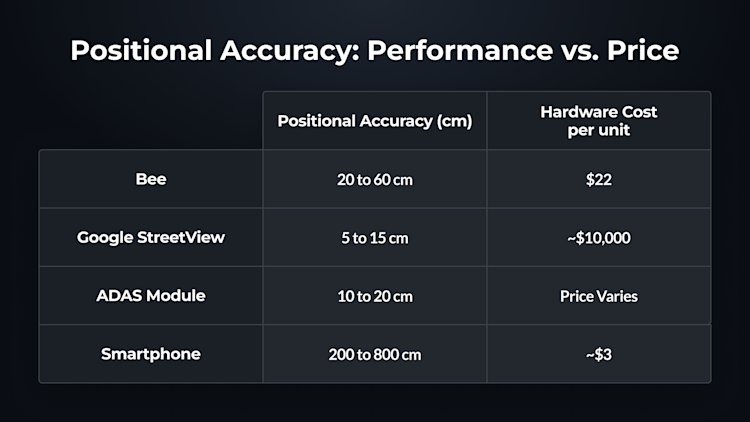

Automotive-grade positional accuracy

Positioning is one of the most important technical challenges in crowdsourced map data collection, and there’s no simple answer. The most precise positioning solutions are too expensive to be scalable. The most scalable positioning solutions aren’t precise enough to be reliable.

Mapping fleets typically use military-grade positioning hardware that can cost tens of thousands of dollars per device, like this combined GPS/INS system from Hexagon | NovAtel. It’s extremely precise, it’s a battle-tested solution … and it’s simply too expensive to deploy at scale.

Smartphones fall on the opposite end of the spectrum. With the relatively forgiving positioning demands of consumer apps, smartphone manufacturers tend to spend just a few dollars on a basic receiver and antenna that they can fit into a compact form factor. Often, they compensate by tapping into other data sources, such as cell towers and known WiFi networks to try and get within a few meters.

That’s usually good enough for turn-by-turn navigation – but not for mapping.

We designed the Bee with a high-end u-blox GPS module that no smartphone or dashcam manufacturer would ever prioritize in their budget. We chose a 25 × 25 × 8 mm Taoglas antenna with a 70 × 70 mm ground plane that no smartphone or dashcam manufacturer would ever consider for packaging reasons.

Antenna size is an underappreciated element of positional accuracy; the Bee makes this a priority in a way that other devices simply cannot.

Pullout box: positional accuracy on the Bee

GPS module: u-blox NEO-F10N

Dual-band (L1/L5) receiver

Multipath mitigation for urban accuracy

Embedded security

GPS antenna: Taoglas AGVLB.25B.07.0060A

Dual-band (L1/L5) active antenna

25 × 25 × 8 mm dual-stacked ceramic patch

70 × 70 mm ground plane

This hardware suite is capable of supporting complementary services such as real-time kinematic (RTK) positioning, post-processed kinematic (PPK) positioning and next-generation technologies for triangulating position by comparing GPS signals from multiple roving devices in the same area.

Positioning software

But hardware is just one piece of the puzzle. No service that relies on real-time connectivity or a view of the sky can solve the toughest challenges:

- Urban canyons between tall buildings, which have an impeded view of satellites

- Underground locations such as tunnels and parking garages, which have no view of satellites

- Above-ground covered locations such as airport decks and parking structures

Software is critical for solving these challenges. That’s why the Bee harnesses sensor fusion spanning IMU data, dead reckoning and visual positioning to complement its best-in-class GPS hardware.
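Dead reckoning, one of the ingredients named above, carries a position estimate forward from the last good GPS fix when satellites disappear, for example inside a tunnel. The sketch below is a simplified planar version, not the Bee’s sensor fusion. It assumes position is tracked in local east/north meters, heading is measured clockwise from north, yaw rate comes from the IMU gyroscope, and speed comes from the last GPS velocity or from visual odometry (the text does not say which source the Bee uses). The function name `dead_reckon` is hypothetical.

```python
import math


def dead_reckon(x_m, y_m, heading_rad, samples):
    """Propagate a 2D position (x = east, y = north, in meters) from the last
    good GPS fix. heading_rad is measured clockwise from north.
    samples: iterable of (speed_mps, yaw_rate_radps, dt_s) tuples."""
    for speed, yaw_rate, dt in samples:
        heading_rad += yaw_rate * dt           # integrate the gyro yaw rate
        x_m += speed * dt * math.sin(heading_rad)
        y_m += speed * dt * math.cos(heading_rad)
    return x_m, y_m, heading_rad


# Ten seconds heading due north at 10 m/s with no turning -> about 100 m north.
x, y, heading = dead_reckon(0.0, 0.0, 0.0, [(10.0, 0.0, 1.0)] * 10)
```

Errors in speed and yaw rate accumulate with every step, which is why dead reckoning is only a bridge; visual positioning and the next GPS fix are needed to pull the estimate back into place.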

Given that the Bee is mounted in a wide range of vehicles, from small sedans to 18-wheeler trucks, it’s critical that we establish precisely where on the vehicle the Bee is mounted. We leverage sensor fusion, visual odometry, and machine learning to determine the precise position and articulation of the device, including its height and orientation on the vehicle.
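One small, well-known piece of mount calibration is recovering the device’s tilt from gravity: when the vehicle is stationary, the accelerometer measures only gravity, so the direction of that vector reveals pitch and roll. This is a minimal sketch, assuming a device frame with x pointing forward, y to the left and z up, and readings averaged over a stationary period; the function name is hypothetical. It cannot recover yaw (how the camera is angled relative to the direction of travel), which is where driving data and visual odometry come in.

```python
import math


def mount_tilt_from_gravity(ax, ay, az):
    """Estimate (roll, pitch) in degrees from a stationary accelerometer
    reading in m/s^2. Assumed device frame: x forward, y left, z up.
    Pitch is positive when the camera tilts up; roll is positive when the
    device's right side dips."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)


# A device tilted 10 degrees upward on a level road.
g = 9.81
roll, pitch = mount_tilt_from_gravity(
    g * math.sin(math.radians(10)), 0.0, g * math.cos(math.radians(10))
)
```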

When you work with the Bee, you’re getting a commitment to the best positioning from a crowdsourced mapping project instead of tradeoffs that will prevent the network from scaling to its full potential.

Conclusion: Connected maps, as dynamic as the world

Every mapmaker dreams of a dynamic, real-time map: one where changes in the world are detected as they happen and used to update maps for safer, faster navigation.

Processing imagery to find those changes is a bit like searching for a needle in a haystack. But today, that’s what many mapmakers still do. Dedicated vehicles trawl the world, collect massive amounts of data, and bring it back for parsing and processing to build maps.

Bee Maps got its start by fitting into this mold, providing street-level imagery that can be fed into these data pipelines and transformed into usable map data. Cloud providers like AWS and Google Cloud love this, as it means an ever-growing appetite for storage and compute, at an ever-growing cost. But the mapping industry is changing. Increasingly, forward-looking mapmakers recognize that rather than consuming massive amounts of imagery and sifting through it to detect the relevant features of a map, the better approach is to generate map features on the edge.

Imagery is still important for map editing that AI cannot handle. It’s important for validating map features, because without a thumbnail or full image, it’s impossible to be confident that computer vision got it right. But generating map features on the edge requires the right hardware. It requires something like the Bee.

After months of prototype testing, we’ve cleared the Bee for mass production starting in Q4 2024. Units will be available for current and prospective customers to test. Just contact us at [email protected].

With tens of thousands of units on preorder, we expect the Bee to account for the vast majority of active mapping devices on the Hivemapper Network by the middle of 2025. While the original HDC and HDC-S dashcams will continue to provide imagery, the ramp-up of the Bee will supplement that with map features generated on the edge, moving the mapping industry to a more efficient, real-time streaming model.

If you’re interested, join us for a live event in San Francisco this December, where you’ll be able to see the Bee in action and meet the development team.
